Most agents can respond to a prompt, but ask them to click a button in your enterprise software, and suddenly their limitations show.

In the age of generative AI, everyone’s racing to build agents that don’t just respond to prompts, but actually do things. Send an email. Update a record. Navigate a dashboard. The dream, right? An intelligent assistant that uses your apps just like a human would, all clicks, scrolls, and savvy shortcuts.

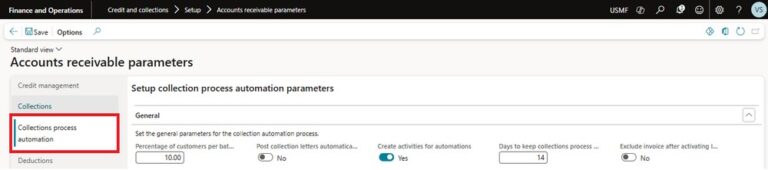

But here’s the catch: most AI Agents fall apart the second they touch a graphical user interface (GUI). Why? Because clicking around a screen in the real world isn’t as easy as it sounds. Enterprise software is dense, dynamic, and often frustrating for humans, let alone for large language models (LLMs) trying to drive it with natural language alone.

That’s where Computer Use Agents (CUAs) come in, and why Salesforce AI is using reinforcement learning to improve this technology.

Most LLM-based agents are built for language. They understand prompts and can answer questions; however, their limitations show when you ask them to perform a multi-step task inside a real application.

Consider this scenario: when navigating a CRM system, a human doesn’t just “know” what to do. They see the screen, recognize visual cues, remember past steps, make decisions in real time, and follow workflows that aren’t always obvious. For an AI Agent to replicate that behavior, it needs more than text prediction. It needs embodied intelligence, or an understanding of its environment.

Most generic agents fail for two reasons:

1. Ambiguous Planning

There’s rarely one “right” way to complete a task. Should the agent click the blue button or use the dropdown in your CRM? Should it search or scroll? Many possible sequences might work, but some are faster, safer, or more aligned with business logic. Choosing wisely, without hindsight, is tough. It’s the kind of decision-making humans do without thinking, but for AI it’s a high-stakes guessing game.

2. Visual Grounding

Most UIs aren’t static or simple. Buttons move. Screens resize. Elements overlap. The agent has to know exactly where to click, and clicking the wrong place can crash a workflow. It’s like navigating a maze where the walls keep moving.

To tackle these challenges, our Salesforce Research team introduced GTA1 (GUI Test-time Agent 1), a cutting-edge, two-part architecture designed to handle both intelligent planning and precise visual grounding across dynamic, real-world interfaces.

At its core, GTA1 blends two essential innovations:

- Test-Time Scaling (Smarter Planning)

Rather than committing to a single action, GTA1 samples multiple potential next steps. It then evaluates them using a multimodal judge model (which sees and understands both the screen and task context) to select the best move — all at runtime.

This adaptive planning system allows GTA1 to avoid early mistakes and adjust course on the fly, without requiring lookahead or brittle hardcoded sequences.
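The sample-then-judge loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not GTA1's actual implementation: `select_next_action`, `toy_planner`, and `toy_judge` are hypothetical names, the planner and judge are trivial stand-ins for real model calls, and the word-overlap scoring is only a placeholder for a multimodal judge that would also see the screen.

```python
import itertools
from typing import Callable, List


def select_next_action(
    propose: Callable[[], str],
    judge: Callable[[str, List[str]], int],
    task: str,
    num_samples: int = 8,
) -> str:
    """Sample several candidate actions, then let a judge pick one of them."""
    candidates = [propose() for _ in range(num_samples)]
    # Drop duplicates (keeping first-seen order) so the judge compares distinct options.
    unique = list(dict.fromkeys(candidates))
    chosen_index = judge(task, unique)
    return unique[chosen_index]


# Hypothetical stand-ins for the planner model and the judge model.
ACTIONS = ["scroll down", "click 'Cancel'", "click 'Save'", "open 'More' dropdown"]
_planner_outputs = itertools.cycle(ACTIONS)


def toy_planner() -> str:
    # A real planner would sample an action from an LLM given the screenshot and task.
    return next(_planner_outputs)


def toy_judge(task: str, candidates: List[str]) -> int:
    # Placeholder scoring: count words shared between the task and each candidate.
    task_words = set(task.lower().split())
    scores = [
        len(task_words & set(c.lower().replace("'", "").split())) for c in candidates
    ]
    return scores.index(max(scores))


print(select_next_action(toy_planner, toy_judge, task="save the updated record"))
# prints: click 'Save'
```

Because the choice is made fresh at every step, nothing in this loop depends on a precomputed plan; only the current task and the current set of candidates matter.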

- RL-Based Grounding (Better Clicking)

Instead of trying to predict the exact center of a button — like many supervised models do — GTA1 uses reinforcement learning to click anywhere inside the correct target. The reward? Landing inside the clickable zone. That’s it.

This simple but powerful change improves flexibility and generalization, especially in high-resolution, cluttered UIs where “center” isn’t always reliable. It also takes away the need for verbose “reasoning” before clicking — something our research shows often hurts grounding performance in static environments.
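To make the reward idea concrete, here is a small sketch that assumes the target is an axis-aligned bounding box and the reward is a binary 1.0 or 0.0. The `Box` type, the function names, and the exact reward values are illustrative assumptions; the only thing taken from the text above is that the reward depends on landing inside the clickable zone. A center-distance measure is included purely for contrast.

```python
import math
from typing import NamedTuple


class Box(NamedTuple):
    """Axis-aligned bounding box of a clickable element, in pixels (assumed format)."""
    left: float
    top: float
    right: float
    bottom: float


def click_reward(x: float, y: float, target: Box) -> float:
    """Return 1.0 if the predicted click lands inside the target box, else 0.0."""
    inside = target.left <= x <= target.right and target.top <= y <= target.bottom
    return 1.0 if inside else 0.0


def center_distance(x: float, y: float, target: Box) -> float:
    """Distance from the click to the box center, shown only for comparison."""
    cx = (target.left + target.right) / 2
    cy = (target.top + target.bottom) / 2
    return math.hypot(x - cx, y - cy)


# A wide, short button: 200 px across, 40 px tall.
button = Box(left=100, top=200, right=300, bottom=240)

hit_near_edge = (110, 235)   # inside the button, far from its center
miss_near_center = (200, 245)  # just below the button, close to its center

print(click_reward(*hit_near_edge, button))     # prints: 1.0
print(click_reward(*miss_near_center, button))  # prints: 0.0
```

In this example the miss is only 25 px from the center while the valid click is roughly 91 px away, so a center-distance objective would rank the miss higher. The inside-the-box reward ranks them the way a user would.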

The Results: Smoother Clicks, Smarter Actions

GTA1 sets new standards across industry benchmarks — proving that scalable, high-performing GUI agents are no longer theoretical.

📊 ScreenSpot-Pro (professional enterprise UIs):

GTA1-7B achieves 50.1%, outperforming many models with 10x the parameters.

GTA1-72B scores 94.8%, rivaling top proprietary systems.

💻 OSWorld-G (Linux environments):

GTA1-7B leads with 67.7%, excelling in text matching, element recognition, layout understanding, and fine-grained manipulation.

On the full OSWorld benchmark, GTA1-7B completes 53.1% of real-world tasks — beating OpenAI’s CUA o3 (42.9%) in half the steps (100 vs. 200).

And GTA1’s advantages compound when scaled. With larger models and more candidate actions (via test-time scaling), performance continues to climb — without bloating wall-clock time thanks to concurrent sampling.
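The wall-clock point is easy to see with a toy example. The sketch below assumes each candidate comes from an independent, I/O-bound model call, simulated here with `time.sleep`; `sample_concurrently` and `slow_planner` are hypothetical names, and a thread pool is just one way to issue the calls in parallel.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def sample_concurrently(propose: Callable[[int], str], num_samples: int) -> List[str]:
    """Request all candidate actions at once instead of one after another."""
    with ThreadPoolExecutor(max_workers=num_samples) as pool:
        # pool.map preserves input order, so results line up with their sample index.
        return list(pool.map(propose, range(num_samples)))


def slow_planner(sample_index: int) -> str:
    # Stand-in for a model call: the sleep simulates network and inference latency.
    time.sleep(0.2)
    return f"candidate-{sample_index}"


start = time.perf_counter()
candidates = sample_concurrently(slow_planner, num_samples=8)
elapsed = time.perf_counter() - start

print(candidates)
print(f"8 samples took {elapsed:.2f}s (sequential would take about 1.6s)")
```

With eight workers, the eight simulated calls overlap, so total time stays close to the latency of a single call rather than growing with the number of candidates.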

Computer Use Agents like GTA1 are built to do what most agents can’t: operate software in the wild. That means they can…

- Complete actual workflows across CRM, ERP, or productivity tools, no APIs required

- Adapt to UI changes, variations, or user-specific layouts

- Learn from previous interactions to improve accuracy and speed

- Respect enterprise constraints, policies, and data access rules

For Salesforce, this means a future where agents can do more than summarize records or draft emails. They can take action: schedule a meeting, update a pipeline, or create a dashboard, all while grounded in our platform’s security and trust.

Trust and Control Still Matter

Even the smartest agent needs a supervisor. At Salesforce, we’re not just building agents; we’re building systems with governance, transparency, and human oversight built in. That’s why every CUA we build is designed with:

- Judgment models for safer decision-making

- Zero-copy data access to minimize risk and maximize context

- Observability tools so admins and users can monitor what agents do — and how well they’re doing it

- Trust Layer protections to enforce role-based access, compliance, and user intent at every click

The next generation of AI won’t live in chat windows; it’ll live in your software. Agents that work across tabs. That understand your workflows. That actually get things done.

GTA1 proves it’s possible. It’s not a demo. It’s not a dream. It’s a foundation for scalable, trustworthy AI that clicks, scrolls, and performs — just like a great teammate would.