GitHub code: https://github.com/JiuhaiChen/BLIP3o

Models: https://huggingface.co/BLIP3o/BLIP3o-Model

Demo: https://huggingface.co/spaces/BLIP3o/blip-3o

OpenAI’s GPT-4o has demonstrated state-of-the-art performance in image understanding, generation, and editing tasks. Emerging hypotheses about its architecture suggest a hybrid pipeline structured as:

Tokens → [Autoregressive Model] → [Diffusion Model] → Image Pixels

This suggests that autoregressive and diffusion models can be used jointly to combine the strengths of both. Motivated by this hybrid design, we adopt an autoregressive + diffusion framework in our study.

In the autoregressive + diffusion framework, the autoregressive model produces continuous intermediate visual features intended to approximate ground-truth image representations, raising two key questions.

First, what should be used as the ground-truth embeddings: should we use a VAE or CLIP to encode images into continuous features?

Second, once the autoregressive model generates visual features, how do we align them with the ground-truth image features: via a simple MSE loss, or by employing a diffusion-based approach?

We consider two image encoder-decoder paradigms:

VAE: encodes the image into low-level pixel features and offers better reconstruction quality. However, VAE-based encoders tend to produce longer sequences of latent embeddings for higher-resolution inputs, which increases the computational cost of training.

CLIP + Diffusion: uses CLIP to encode the image into high-level semantic features (continuous visual embeddings) and a diffusion model to reconstruct a real image from them. During training, the diffusion decoder is fine-tuned to use the CLIP embeddings as conditions to recover the original image from Gaussian noise, while the CLIP encoder remains frozen. Each image, regardless of its resolution, is encoded into a fixed-length sequence of 64 continuous vectors, which gives compact and semantically rich latent embeddings. The trade-off is that this approach requires additional training to adapt the diffusion decoder to each CLIP encoder.
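To make the sequence-length difference concrete, here is a small arithmetic sketch. It assumes a VAE that downsamples each spatial side by a factor of 8 and counts every latent position as one token; both are illustrative assumptions, not details from BLIP3-o (many diffusion transformers also patchify latents, which divides the count by a constant but keeps the quadratic growth). The only figure taken from the text is the fixed length of 64 CLIP vectors, and the function names are hypothetical.

```python
# Hypothetical sketch: latent sequence length of a VAE vs. the fixed-length
# CLIP encoding. ASSUMPTION: the VAE downsamples each side by 8 and every
# latent position is one token.

CLIP_TOKENS = 64  # fixed length stated in the text, independent of resolution


def vae_latent_tokens(height: int, width: int, downsample: int = 8) -> int:
    """Number of latent positions for an image under the assumed VAE."""
    if height % downsample or width % downsample:
        raise ValueError("image sides must be divisible by the downsample factor")
    return (height // downsample) * (width // downsample)


def clip_tokens(height: int, width: int) -> int:
    """CLIP + diffusion encoding: always 64 vectors, whatever the resolution."""
    return CLIP_TOKENS


if __name__ == "__main__":
    for side in (256, 512, 1024):
        print(f"{side}x{side}: VAE={vae_latent_tokens(side, side)}, "
              f"CLIP={clip_tokens(side, side)}")
```

Under these assumptions, doubling the side length quadruples the VAE sequence (1,024 tokens at 256x256 versus 4,096 at 512x512), while the CLIP encoding stays at 64 vectors.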

Given the visual features predicted by the autoregressive model and the ground-truth image features from the VAE or CLIP, we consider two training objectives:

MSE: we compute the MSE loss between the predicted and ground-truth image features.

Flow Matching: conditioned on the visual features predicted by the autoregressive model, a diffusion transformer is trained with a flow matching loss to predict the ground-truth CLIP or VAE features.
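The two objectives can be sketched in plain Python as follows. The flow matching part assumes the common linear (rectified-flow) path between Gaussian noise `x0` and the target features `x1`, with the model regressing the velocity `x1 - x0`; the source does not specify the exact path or parameterization BLIP3-o uses. `velocity_model` is a hypothetical stand-in for the diffusion transformer, and features are flattened into a single list of floats for simplicity.

```python
import random
from typing import Callable, List, Sequence

Vector = List[float]


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error between two equal-length feature vectors."""
    if len(pred) != len(target):
        raise ValueError("length mismatch")
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def flow_matching_loss(
    velocity_model: Callable[[Vector, float, Vector], Vector],
    target: Vector,     # ground-truth CLIP (or VAE) features, x1
    condition: Vector,  # visual features predicted by the autoregressive model
    rng: random.Random,
) -> float:
    """One-sample flow matching loss on an ASSUMED linear noise-to-data path."""
    t = rng.random()                               # t ~ U[0, 1)
    noise = [rng.gauss(0.0, 1.0) for _ in target]  # x0 ~ N(0, I)
    # Point on the straight line between noise (t=0) and target (t=1).
    x_t = [(1 - t) * n + t * x for n, x in zip(noise, target)]
    # Velocity of that path, d x_t / dt, which the model should predict.
    true_velocity = [x - n for n, x in zip(noise, target)]
    pred_velocity = velocity_model(x_t, t, condition)
    return mse(pred_velocity, true_velocity)
```

In the MSE variant, the autoregressive output is compared with the target directly. In the flow matching variant, the same features only enter as `condition`, and the loss is computed on a noised sample, so the model learns to transport a distribution of noise onto the targets rather than regressing a single point estimate.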

The combination of different image encoder–decoder architectures and training objectives gives rise to a range of design choices for image generation models:

CLIP + MSE: Following Emu2 and Seed-X, we use CLIP to encode each image into a fixed-length sequence of 64 semantically rich visual embeddings. The autoregressive model is trained to minimize the mean squared error (MSE) between the predicted visual features and the ground-truth CLIP embedding X. During inference, given a text prompt, the autoregressive model predicts the visual features, which are then passed to a diffusion-based visual decoder to generate the real image.

CLIP + Flow Matching: As an alternative to the MSE loss, we employ a flow matching loss to train the model to predict ground-truth CLIP embeddings. Given a prompt, the autoregressive model generates a sequence of visual features. These features serve as conditions that guide the diffusion process, which yields a predicted CLIP embedding approximating the ground-truth CLIP features. In essence, the inference pipeline involves two diffusion stages: the first uses the conditioning visual features from the autoregressive model to iteratively denoise into CLIP embeddings, and the second converts these CLIP embeddings into real images with the diffusion-based visual decoder. This approach enables stochastic sampling in the first stage, allowing for greater diversity in image generation.

VAE + Flow Matching: Similar to MetaQuery, we can also use a flow matching loss to predict the ground-truth VAE features. At inference time, given a prompt, the autoregressive model produces visual features. Conditioned on these features, the diffusion model iteratively removes noise to produce VAE latents, which the VAE decoder then converts into real images.
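At inference, the first stage of CLIP + Flow Matching (and the single flow stage of VAE + Flow Matching) amounts to integrating the learned velocity field from noise to features. The sketch below uses a plain Euler solver; the solver, the step count, and all function names are assumptions rather than BLIP3-o's actual sampler, and `pixel_decoder` is a hypothetical stand-in for the diffusion-based visual decoder of the second stage.

```python
import random
from typing import Callable, List

Vector = List[float]
VelocityFn = Callable[[Vector, float, Vector], Vector]


def sample_flow(
    velocity_model: VelocityFn,
    condition: Vector,
    dim: int,
    steps: int = 50,
    seed: int = 0,
) -> Vector:
    """Euler-integrate dx/dt = v(x, t, c) from Gaussian noise (t=0) to features (t=1)."""
    rng = random.Random(seed)
    # Random starting point: with a learned velocity field, different seeds
    # can lead to different outputs (stochastic sampling).
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    dt = 1.0 / steps
    for i in range(steps):
        t = i * dt
        v = velocity_model(x, t, condition)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


def two_stage_generate(
    ar_features: Vector,
    stage1_velocity: VelocityFn,
    clip_dim: int,
    pixel_decoder: Callable[[Vector], Vector],
    seed: int = 0,
) -> Vector:
    """Stage 1: sample a CLIP embedding from noise; stage 2: decode it to pixels."""
    clip_embedding = sample_flow(stage1_velocity, ar_features, clip_dim, seed=seed)
    return pixel_decoder(clip_embedding)
```

As a sanity check, a toy velocity field that points straight at the conditioning vector, `v = (c - x) / (1 - t)`, drives the Euler solver exactly onto `c` at the last step. A trained diffusion transformer would supply a learned field instead, and the random starting noise is what gives the first stage its sampling diversity.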

The following ablation shows that CLIP + Flow Matching is the most effective design choice.

In our study, we find that when image generation is integrated into a unified model, autoregressive models learn semantic-level features (CLIP) more effectively than pixel-level features (VAE). Moreover, adopting flow matching as the training objective better captures the underlying image distribution, resulting in greater sample diversity and enhanced visual quality.

We adopt a two-stage diffusion process rather than a single stage:

- Stage 1: The autoregressive model and diffusion transformer produce only the high-level semantic embeddings.

- Stage 2: A lightweight diffusion decoder fills in the low-level details.

This split not only maintains quality but also makes the overall training process much more efficient.

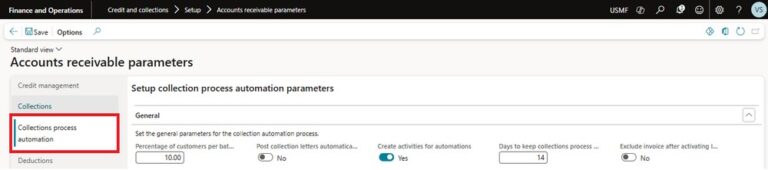

Using a CLIP encoder places image understanding and image generation in the same semantic space, effectively unifying the two tasks. We use sequential training (late fusion) instead of joint training (early fusion) for the following reasons:

- It lets us freeze the autoregressive backbone and preserve its image understanding capability.

- We can dedicate all training capacity to image generation, avoiding the inter-task interference of joint training.
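The late-fusion setup boils down to bookkeeping over which components receive gradients. The stub below is a hypothetical illustration (the class, component names, and helper are not from the BLIP3-o code) and omits the pixel decoder for brevity.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class UnifiedModelStub:
    """Hypothetical stand-in that only tracks which components are trainable."""
    trainable: Dict[str, bool] = field(
        default_factory=lambda: {
            "ar_backbone": True,            # autoregressive model used for understanding
            "diffusion_transformer": True,  # generation head trained with flow matching
        }
    )

    def freeze(self, name: str) -> None:
        if name not in self.trainable:
            raise KeyError(name)
        self.trainable[name] = False

    def trainable_components(self) -> Set[str]:
        return {name for name, flag in self.trainable.items() if flag}


def setup_sequential_generation_training(model: UnifiedModelStub) -> UnifiedModelStub:
    """Late fusion: freeze the backbone so understanding is untouched; train generation only."""
    model.freeze("ar_backbone")
    return model
```

In a PyTorch implementation, the same effect would typically come from calling `requires_grad_(False)` on the backbone module and passing only the generation head's parameters to the optimizer.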

Based on these findings, we adopt CLIP + Flow Matching with sequential training to develop BLIP3-o, our state-of-the-art unified multimodal model.

For the 8B model, we combine around 25 million open-source images with an additional 30 million proprietary images. All image captions are generated by Qwen2.5-VL-7B-Instruct, yielding detailed descriptions with an average length of 120 tokens. To improve generalization to varying prompt lengths, we also include around 10% (6 million) shorter captions of around 20 tokens from.

For the fully open-source 4B model, we use 25 million publicly available images, each paired with the same detailed captions. We also mix in around 10% (3 million) short captions sourced from.
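The "around 10%" figures can be checked with a little arithmetic, and the mixing itself sketched as a sampler. This assumes the short captions are extra samples on top of the long-caption images and that mixing happens by per-sample random choice; neither detail is stated in the text, and the function names are hypothetical.

```python
import random
from typing import List, Sequence


def short_caption_share(n_long: float, n_short: float) -> float:
    """Fraction of the training mixture that uses short captions."""
    return n_short / (n_long + n_short)


def mixed_caption_stream(
    long_captions: Sequence[str],
    short_captions: Sequence[str],
    p_short: float,
    n: int,
    seed: int = 0,
) -> List[str]:
    """Draw n training captions, taking a short one with probability p_short."""
    rng = random.Random(seed)
    out = []
    for _ in range(n):
        pool = short_captions if rng.random() < p_short else long_captions
        out.append(rng.choice(pool))
    return out


if __name__ == "__main__":
    # Sizes in millions, taken from the text above.
    print(f"8B: {short_caption_share(25 + 30, 6):.1%} short captions")
    print(f"4B: {short_caption_share(25, 3):.1%} short captions")
```

Under that assumption, the shares come out at about 9.8% for the 8B mix (6M of 61M) and 10.7% for the 4B mix (3M of 28M), both consistent with "around 10%".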

In the instruction tuning stage, we further prompt GPT-4o to generate 60k high-quality examples. We find that the model rapidly adapts to the GPT-4o style, improving both prompt alignment and visual quality. We also observe that the model learns more effectively from AI-generated images than from real images.

To support the research community, we release 25 million detailed captions, 3 million short captions, and the 60k instruction tuning examples.


In summary, we have presented the first systematic exploration of hybrid autoregressive and diffusion architectures for unified multimodal modeling, evaluating three critical aspects: image representation (CLIP vs. VAE features), training objective (Flow Matching vs. MSE), and training strategy (joint vs. sequential). Our experiments demonstrate that CLIP embeddings paired with a flow matching loss deliver both higher training efficiency and higher-quality outputs. Building on these insights, we introduce BLIP3-o, a family of state-of-the-art unified models, enhanced with BLIP3o-60k, a 60k instruction tuning dataset that substantially improves prompt alignment and visual aesthetics. We are actively working on applications of the unified model, including iterative image editing, visual dialogue, and step-by-step visual reasoning.

If you have any questions, you are welcome to join our discussion: https://discord.gg/SsVYdV84bw

● Paper: https://arxiv.org/abs/2505.09568v1

● GitHub: https://github.com/JiuhaiChen/BLIP3o

● Dataset:

Pretrain → https://huggingface.co/datasets/BLIP3o/BLIP3o-Pretrain-Long-Caption

Instruction tuning → https://huggingface.co/datasets/BLIP3o/BLIP3o-60k